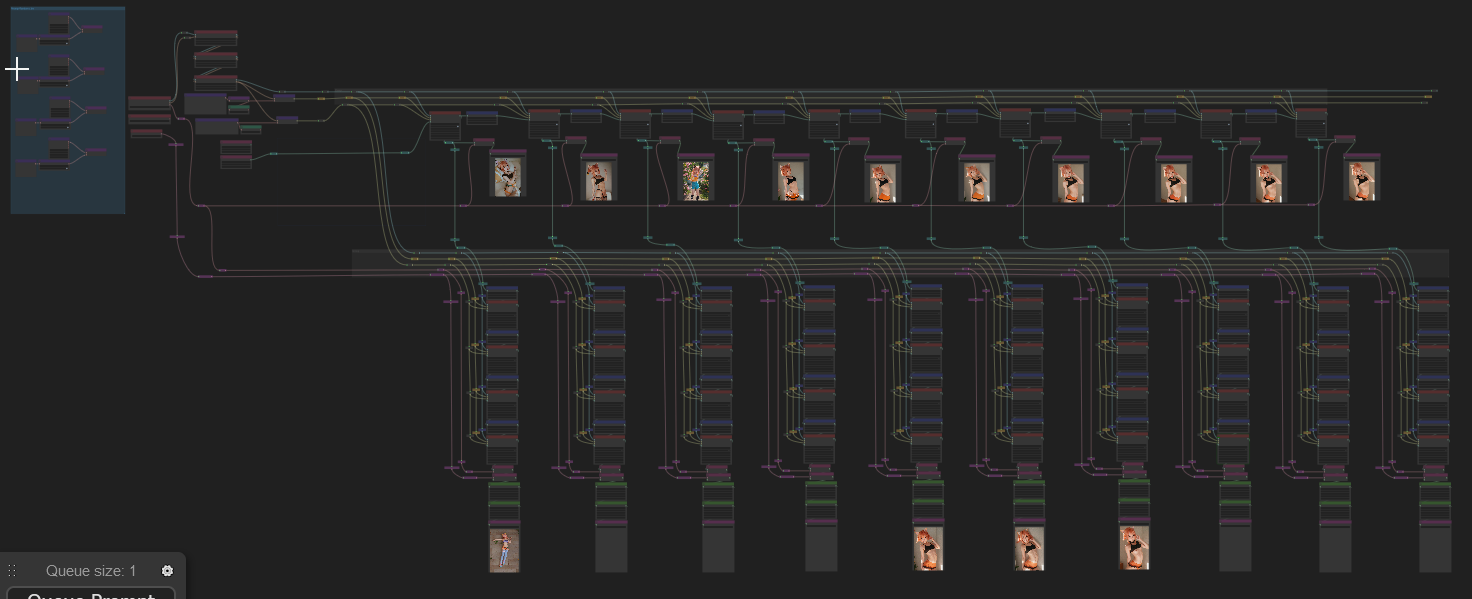

wyrde's horribly complicated multi latent hirez fixing and scaling

Makes extensive use of WAS nodes.

- Install the WAS Node Suite to avoid a mess of red boxes.

The Principle of the Thing

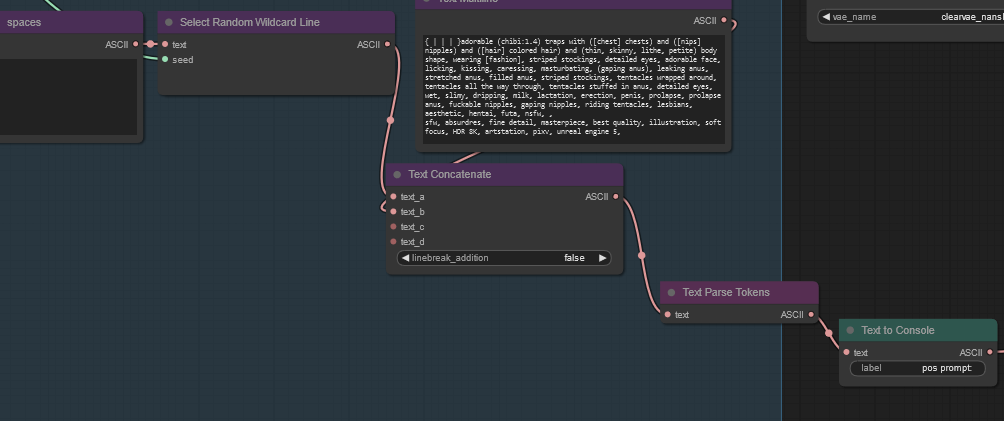

WAS was kind enough to write a nifty node that allows tokens to be set via ascii/text nodes. This hugely simplified the random prompt generation in the previous workflow.

So, of course, I had to make it more complicated.

This workflow

- generates a series of images which SD repeatedly samples.

- they tend to improve slowly, but sometimes have wild changes

- the images are then hi-rez fixed and upscaled

- Uses a prompt with a high degree of randomness

- figure, hair, ears, & clothing. More elements can be randomized with these as examples.

- values are picked from a list, then assigned to a token. The tokens can then be evaluated in other areas.

- Like before, I wanted all the prompt data to be shared with the image.

- In ComfyUI, words in {curly braces | separated by | pipes are | used to | generate} random results. Due to the way ComfyUI functions, an image's workflow will contain only the items in the prompt which were evaluated for that image. The other random elements are dropped.
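To make the curly-brace behavior concrete, here is a minimal Python sketch of the idea. It is not ComfyUI's actual code; `expand_choices` is a hypothetical helper that only mimics the behavior described above: one option per group is kept and the rest are discarded, which is why the saved workflow no longer shows the alternatives.

```python
import random
import re

# Matches one {a|b|c} group that has no nested braces inside it.
_GROUP = re.compile(r"\{([^{}]*)\}")

def expand_choices(prompt: str, rng: random.Random) -> str:
    """Replace every {a|b|c} group with one randomly chosen option.

    Hypothetical helper for illustration only.
    """
    while True:
        match = _GROUP.search(prompt)
        if match is None:
            return prompt
        options = [opt.strip() for opt in match.group(1).split("|")]
        choice = rng.choice(options)
        # Only the chosen option survives; the alternatives are dropped.
        prompt = prompt[:match.start()] + choice + prompt[match.end():]

rng = random.Random(0)
result = expand_choices("a girl with {cat|fox|elf} ears and {long|short} hair", rng)
print(result)
```

After expansion the string contains no braces at all, so nothing in it records which other options existed.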

The workflow

- Generates a text prompt from several randomized lists

- the sections are assigned to tokens.

- the prompt is sampled and tokens evaluated

- sampled again

- and again -- 10 times in all.

- The resulting samples are then hi-rez fixed and upscaled.
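The steps above can be sketched roughly in Python. This is only an outline under loud assumptions: the lists, the `<name>` token placeholder style, and the `fake_sample` stand-in are all hypothetical. The real workflow does this with WAS text nodes and ComfyUI samplers, and whether each pass feeds on the previous result exactly like this is my reading of the description.

```python
import random

# Hypothetical lists; in the workflow these live in multiline text nodes.
LISTS = {
    "figure": ["slender", "athletic"],
    "hair": ["long silver hair", "short red hair"],
    "ears": ["elf ears", "cat ears"],
    "clothing": ["flight suit", "plate armor"],
}

def pick_tokens(lists, rng):
    """Pick one value per list and store it under that list's token name."""
    return {name: rng.choice(values) for name, values in lists.items()}

def parse_tokens(template, tokens):
    """Substitute each <name> placeholder (hypothetical syntax) with its value."""
    for name, value in tokens.items():
        template = template.replace(f"<{name}>", value)
    return template

def run_chain(prompt, sample, passes=10):
    """Call the sampler repeatedly, feeding each result into the next pass."""
    latent = None
    results = []
    for _ in range(passes):
        latent = sample(prompt, latent)
        results.append(latent)
    return results

def fake_sample(prompt, latent):
    # Placeholder sampler: counts passes instead of producing an image.
    return 1 if latent is None else latent + 1

rng = random.Random(42)
tokens = pick_tokens(LISTS, rng)
prompt = parse_tokens("1girl, <figure>, <hair>, <ears>, <clothing>", tokens)
samples = run_chain(prompt, fake_sample)
print(prompt, len(samples))
```

Each entry in `samples` stands for one of the ten intermediate results that would then go on to hi-rez fixing and upscaling.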

The entire process takes around 30 minutes on my GTX 1060.

Includes some LoRAs. There are also places where moving noodles changes the generation.

Versions

- v1.0 - it's a mess, yo

- v1.1 - added img2img nodes, cleaned up lines, better grouping

Example Results

Eventually, I'm tired.

Special Note

Because of how the backend evaluates the text boxes, it doesn't know the contents of the tokens have changed when parsing the prompts. There are two ways to fix this:

- put { | | } in the prompt. It will evaluate the space each time and run the prompt, thus also evaluating tokens.

- make a new multiline node → random line node → text concatenate (the random result and the prompt) → text parse tokens → text to conditioning

- this is more complex, but preserves the text prompt in the image workflow.
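A toy Python model may help show why the first fix works. It is not ComfyUI's backend, just a sketch built on two assumptions taken from the note above: a prompt node is skipped when its text is unchanged, and a text box containing a random group is re-evaluated on every run. `ToyPromptNode` and the `<name>` placeholder style are hypothetical.

```python
import random
import re

# Matches a {a|b} style random group with at least one pipe.
RANDOM_GROUP = re.compile(r"\{[^{}]*\|[^{}]*\}")

def _pick(match):
    # Choose one option from the group; for "{ | | }" every option is a space.
    return random.choice(match.group(0)[1:-1].split("|"))

class ToyPromptNode:
    """Toy model: reruns only if the text changed or holds a random group."""

    def __init__(self):
        self.last_text = None
        self.last_output = None
        self.runs = 0

    def execute(self, text, tokens):
        changed = text != self.last_text
        # Assumption from the note: a random group forces re-evaluation.
        if changed or RANDOM_GROUP.search(text):
            output = RANDOM_GROUP.sub(_pick, text)
            for name, value in tokens.items():
                output = output.replace(f"<{name}>", value)
            self.last_text = text
            self.last_output = output
            self.runs += 1
        return self.last_output

plain = ToyPromptNode()
plain.execute("girl with <hair>", {"hair": "red hair"})
stale = plain.execute("girl with <hair>", {"hair": "blue hair"})
print(stale)  # token change goes unnoticed because the text is identical

forced = ToyPromptNode()
forced.execute("girl with <hair>{ | | }", {"hair": "red hair"})
fresh = forced.execute("girl with <hair>{ | | }", {"hair": "blue hair"})
print(fresh)
```

In this model the second fix works for a similar reason: concatenating a random line changes the text that reaches the token parser, while the original prompt text stays intact in the saved workflow.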

Resources

I need to change these to match. Will do it tomorrow.

Model

Lora

- https://civitai.com/models/8858/maplestory2game-chibi-style-hn

- https://civitai.com/models/21670/astrobabes

- https://civitai.com/models/25803/battle-angels

Embeds

- EasyNegative https://civitai.com/models/7808/easynegative

- bad-hands-5 https://huggingface.co/yesyeahvh/bad-hands-5/tree/main